In part 1 of this article, I outlined how a data steward – a person who is responsible for data quality and typically not on the IT staff – would create a collection of rules that can be used to automate data cleansing and address verification. In this post, I will outline how IT can automate that process and incorporate changing business rules on the fly and without the need to change code. You should find this about as easy as I find falling when I go skiing – and the records of various Colorado high country emergency rooms and ski patrols will tell you that I find falling very easy indeed.

There is a great deal of overlap between DQS and Master Data Services (MDS), particularly when dealing with address data. For the processes of master data management and managing data quality there are two issues we can address (pun intended) with DQS:

- Is this address properly formatted?

- Does this address exist?

The question of “is this the correct address for this person?” is addressed using MDS.

Consider, for example this address:

Barack Obama

1600 Pennsylvania Avenue NW

Washington, DC 20500

If the question is “Does Barack Obama live at 1600 Pennsylvania Avenue?” use MDS; if the questions are “Is this a valid address?” or “Is this address properly formatted?” use DQS.

Data Cleaning

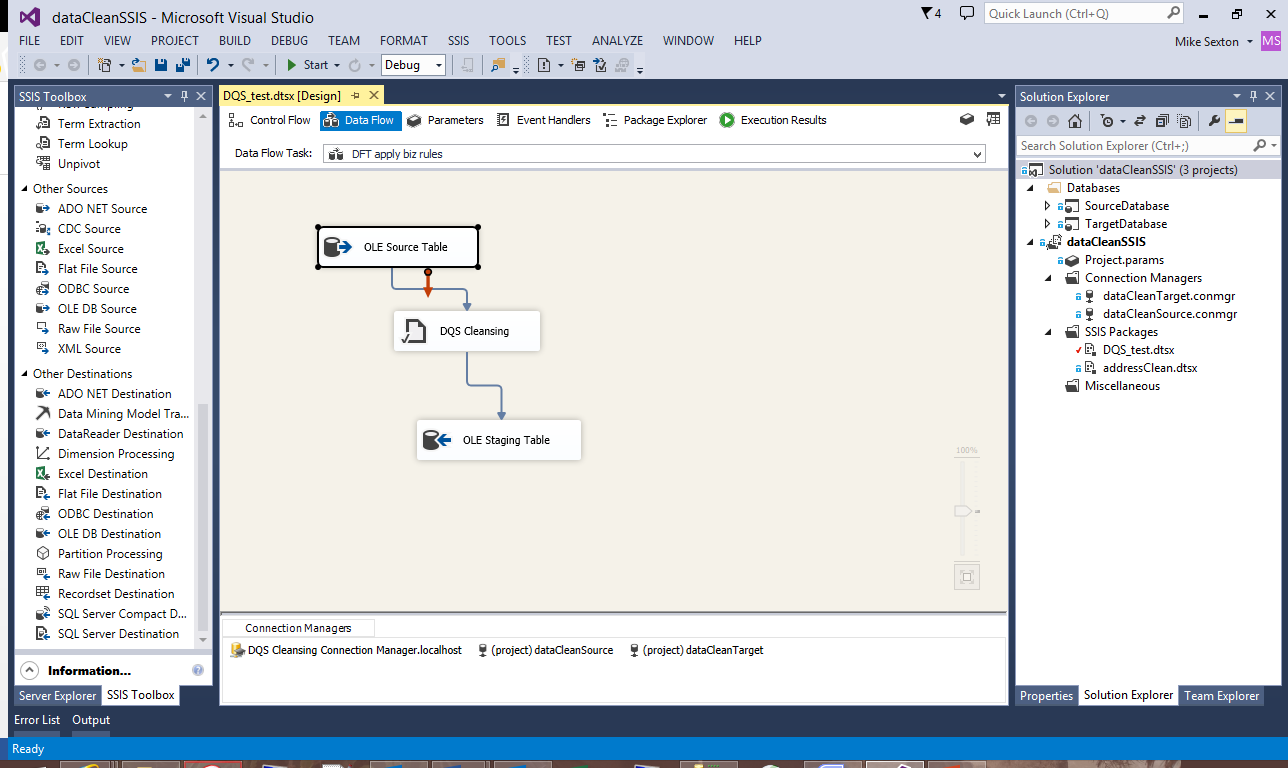

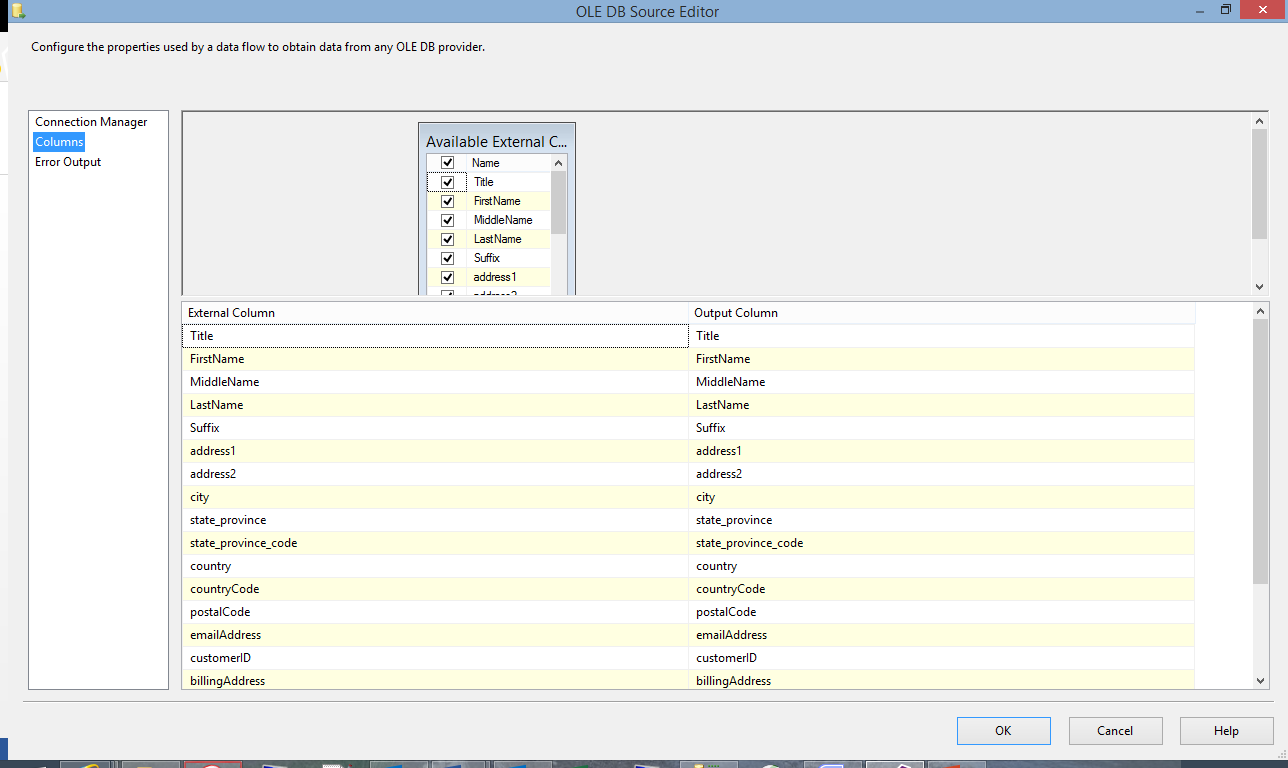

For purposes of this post, I’ll assume you, fellow traveler, are familiar with the basics of SSIS. DQS is used as a transformation within a data flow task. To start, set up your connections to your source and target databases and set up your data source. In this case I set up an OLE DB source and, for the first data flow, pointed it at a demo table I set up holding address data to clean and validate.

Note that there are fields to be moved that are not part of any address validation process.

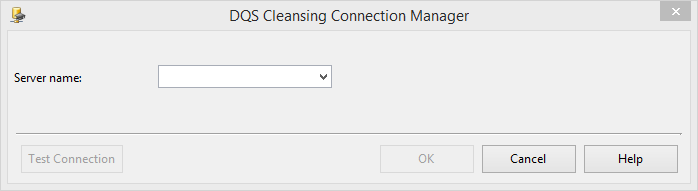

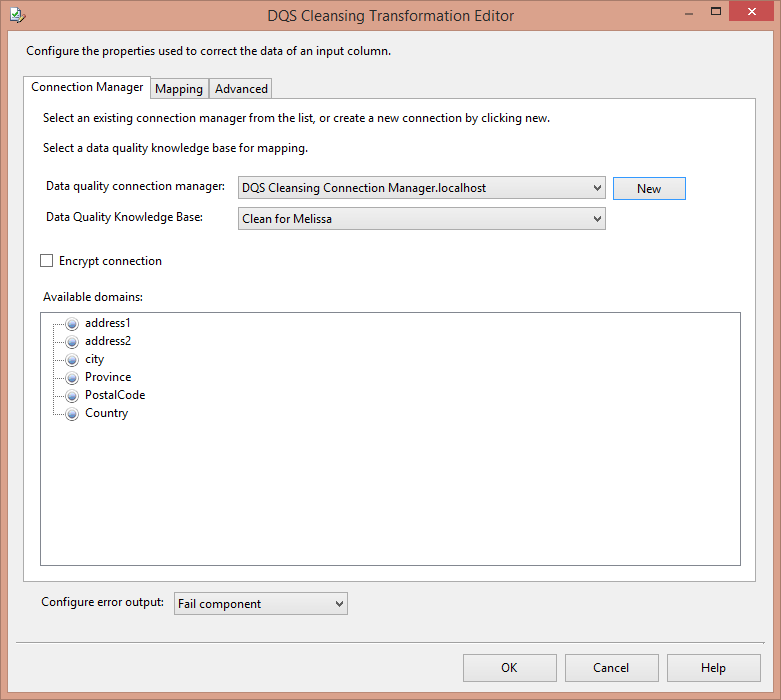

Once you have your data source set up, drag a DQS Cleansing transformation into the data flow and link it to your source. You will need to connect to the DQS Server – the SQL Server installation where the three “DQS_” databases have been installed. You will not need to specify the catalog, as the object knows to use these databases. You will also note that there is no place for user names or passwords. You will be using windows authentication only. When a SSIS package is deployed to production, the security context that it will be run in (e.g. via SQL Server Agent jobs) will need read and write access to the DQS_ databases.

Once the connection is established, select a knowledge base that contains the rules you need to apply to this step. Here we will be cleaning data before sending it for address verification so I have selected “Clean for Melissa”. Since MelissaData charges on a per address basis, it is simply a waste of money to send data you know is incorrect. In the prior article you will recall we found a typo for a city name. The data contained a record for a city called “Pnot-Rouge”, a misspelling of the city of Pont-Rouge, Quebec.

Note when we connect to the DQS server in the DQS Cleansing transformation the domains that were set up in part 1 of this series appear.

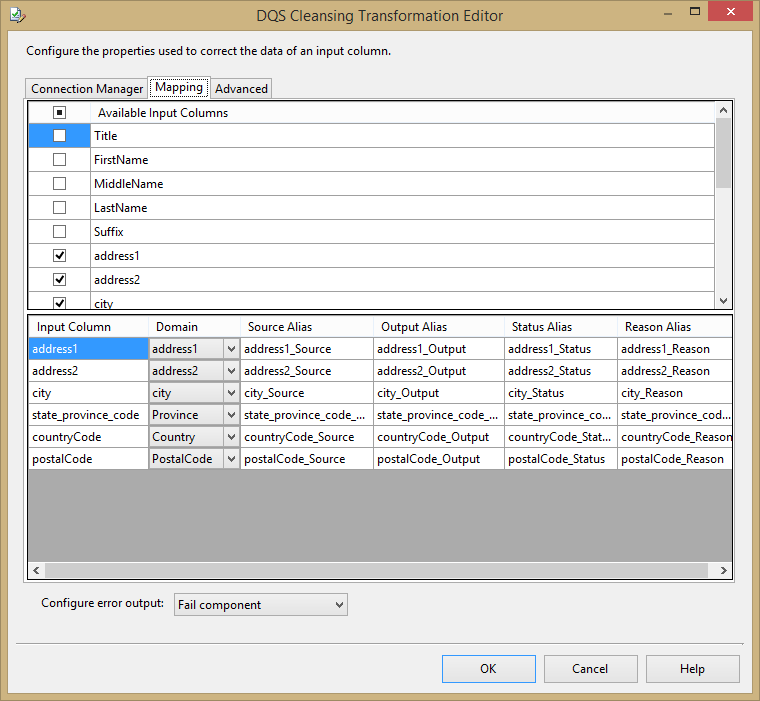

For purposes of this post, I will ignore the “advanced” tab. Your next step would be to map the fields in your data source with the relevant domain, the set of rules you want applied to this data.

I have selected only the address data and mapped each field to the relevant domain. The transformation editor automatically populates the source, output, status, confidence and reason alias fields as shown. There will be more on these fields when we look at the MelissaData results.

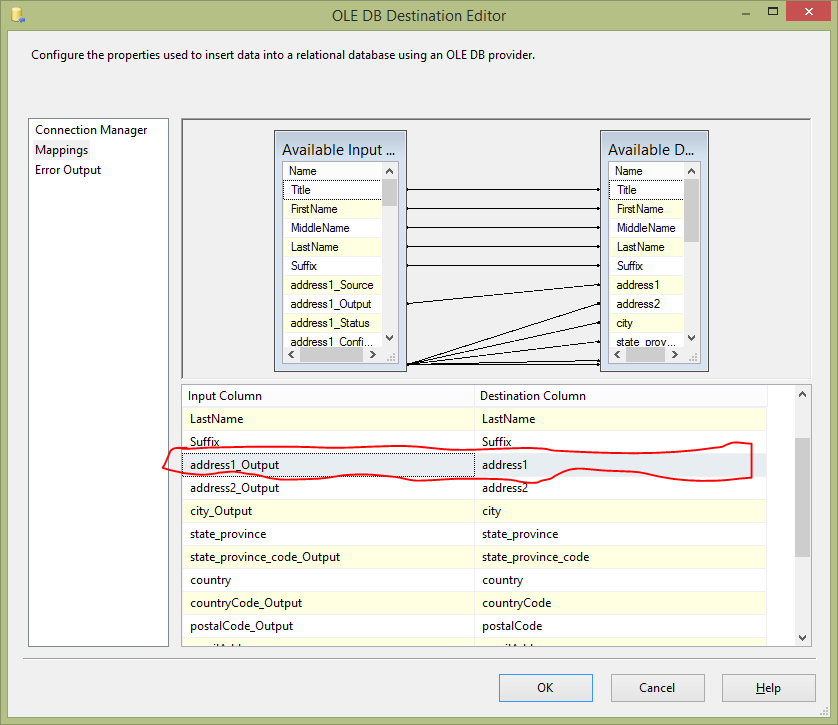

Our target in this case is a staging table, used as temporary storage prior to sending the data to MelissaData. You could, of course, chain the cleansing and validation DQS transforms. I have just set things up this way for clarity for discussing this demo at a SQL Saturday event. The DQS transform has renamed the original columns “_Source” and created the “_Output” columns to hold the corrected data. You will map the “_Output” column to the appropriate destination field. That is, after all, the whole point of this exercise, though for audit purposes you may want to have “before” and “after” records in your MDM system.

Data Validation

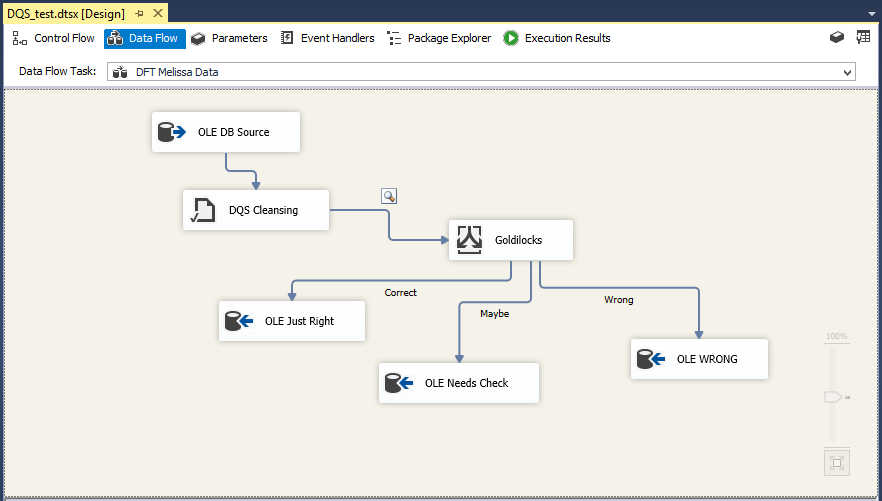

Once the data has been cleaned the addresses need to be validated. In part 1 of this series the third party data validation was set up with MelissaData’s Address Check through the Windows Azure store. Here we will automate the use of that reference data.

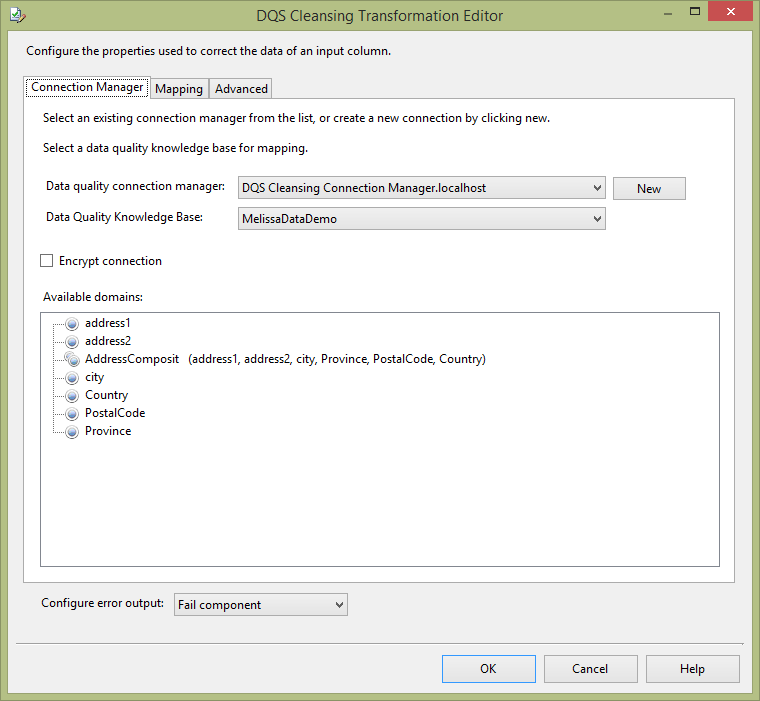

Once again drag a data flow task into your control flow and set up a data source. In this case use the staging table we populated in the in the prior step. Again, drag a DQS Cleansing transformation into the data flow and connect it to the source. Use the same data quality connection manager created in the prior step, however, in this case we will be using the MelissaDataDemo knowledge base. Notice that the domains and the composite domain are all available.

When you map the source columns to the appropriate domains, you will not use the composite domain, even though the reference data rules are at the composite level. Instead you will map to the individual domains. The individual domains will inherit the reference data from the composite. This always struck me as a bit backward for inheritance as it goes from the lowest level, specific object to the more generic object. I think of it as my kid hitting the big time financially and setting me up for life, as opposed to him waiting for me to die to inherit my money.

This transform will take the data, pass it through to the composite domain we created in part 1, and validate the address data against the MelissaData reference data.

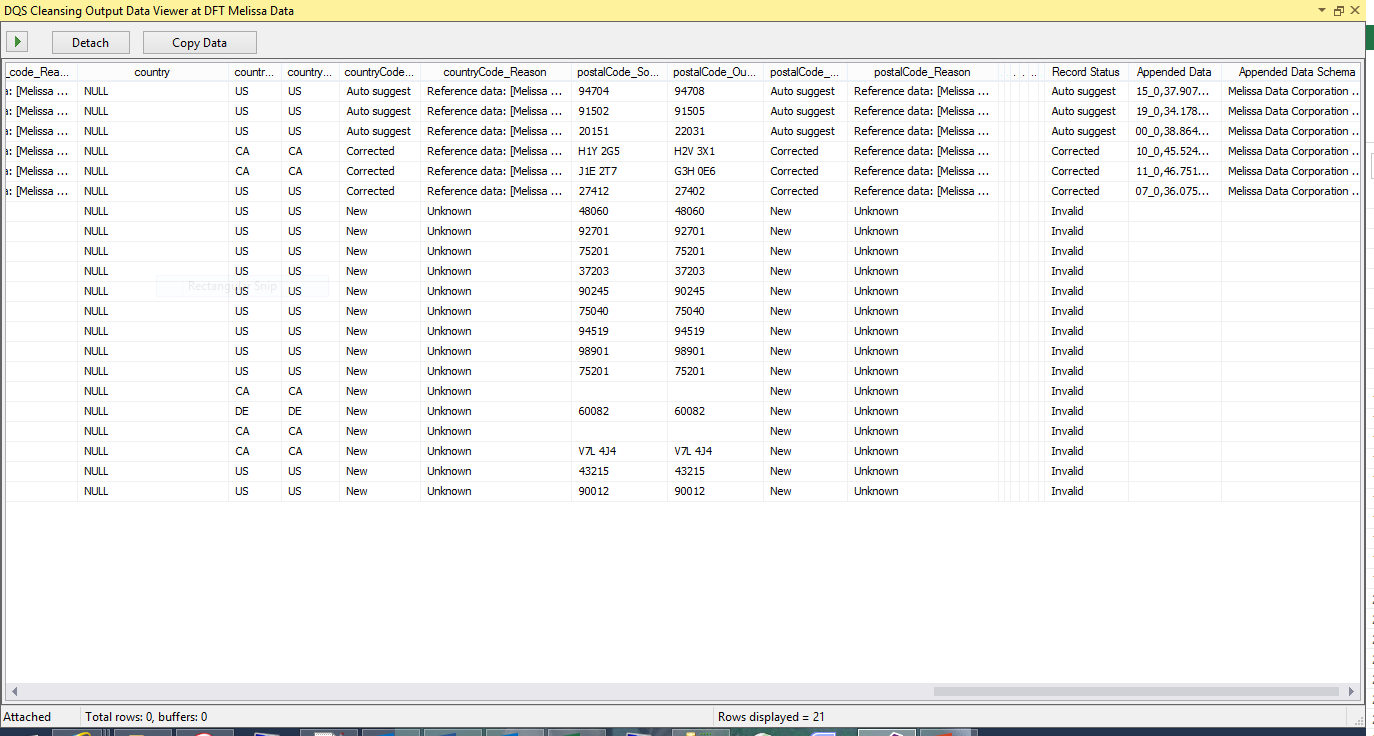

I have added a data viewer to the data flow.

The record set itself is rather wide, so it is not possible to show all of the enrichment that MelissaData provides, however, there are several items to note. First, MelissaData has augmented the data by adding geocodes to the record. Second, you can see that we get back corrected addresses, suggested changes and invalid records.

We can use the record status to direct data to appropriate repositories or work flows. Some of this data can be deemed good, so we can simply send it to the appropriate gold record. Some of this data may be good, but would require human review to validate the suggested change from MelissaData. It must be emphasized that the human doing the review does not work for IT. Instead this person works in the area responsible for the data itself. Since this is customer data, that might be sales support, order fulfillment or billing. Some of this data will be invalid and needs to be sent back for correction to the appropriate business entity (e.g. sales). To that end, best practice is to put a conditional split into the data flow and send the data to the appropriate repository for whatever further action is required, as shown below.

Conclusion

DQS is a powerful tool that allows for easy, automated application of ever evolving business rules without the need to involve technical professionals every time a single rule changes or some correction to data needs to be applied. This tool logically divides the work into areas of expertise. Technologists can automate the rules and keep the lights on, then are freed up to focus on other, typically more interesting tasks. The business, the people who know best what the data should be, can create and modify business rules that are applied to the data automatically, without the long waits required to turn service tickets or projects around.